Retrieval Augmented Generation: Enhancing AI with Contextual Understanding

In the field of artificial intelligence (AI), Retrieval Augmented Generation (RAG) stands out as an approach that bridges information retrieval and natural language generation. This guide explores its core concepts, applications, benefits, challenges, and future prospects.

Understanding RAG: A Fusion of Retrieval and Generation

At its core, RAG combines a retrieval component with a natural language generation model. A standalone language model can draw only on what it learned during training, which is stored in its parameters. RAG instead retrieves relevant passages from an external knowledge source at query time and conditions its generated text on them, which helps it produce more relevant and coherent responses.
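The core mechanism can be sketched in a few lines of Python. This is a minimal illustration, assuming the retrieval step has already returned a list of passages; the function name `build_augmented_prompt` and the prompt wording are hypothetical, and real systems use their own templates.

```python
def build_augmented_prompt(query: str, passages: list[str]) -> str:
    """Combine retrieved passages with the user's query into a single prompt.

    Hypothetical template: the wording and layout are illustrative only.
    """
    # Number each passage so the generated answer can refer back to its source.
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Made-up passages standing in for the output of a retriever.
prompt = build_augmented_prompt(
    "How many vacation days do new employees get?",
    [
        "New employees receive 15 vacation days per year.",
        "Unused vacation days roll over for one year.",
    ],
)
print(prompt)
```

In this sketch the generator receives the augmented prompt rather than the bare query; the model itself is unchanged, and only its input carries the external knowledge.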

Key Concepts and Terminologies

Before diving into the specifics of RAG, let’s familiarize ourselves with some key concepts and terminologies in the field of natural language processing (NLP):

- Natural Language Processing (NLP): The branch of AI concerned with the interaction between computers and human language.

- Information Retrieval: The process of obtaining information from a collection of documents or databases.

- Text Generation: The task of generating coherent and contextually relevant text based on input data or prompts.

- Dialogue Systems: AI systems designed to engage in natural language conversations with users.

- Question Answering: The task of automatically answering questions posed in natural language.

- Summarization: The process of condensing a piece of text while preserving its key information.

- Contextual Understanding: The ability of AI systems to comprehend and interpret the context of a given text or conversation.

- Knowledge Integration: The process of incorporating external knowledge sources into AI models to enhance their performance.

Exploring the Benefits of RAG

1. Enhanced Relevance and Coherence

By grounding generation in knowledge retrieved from large datasets or other external sources, RAG can substantially improve the relevance and coherence of generated text. Responses grounded in retrieved evidence are more likely to be accurate as well as contextually appropriate, though retrieval alone does not guarantee correctness.

2. Context-Aware Responses

RAG models are well suited to generating context-aware responses, which makes them a good fit for tasks such as dialogue generation and question answering. Because retrieval is driven by the context of a conversation or query, RAG can produce more informative and tailored responses.
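One simple way to make retrieval context-aware in a dialogue is to search with the latest message plus a few preceding turns, so that a follow-up question is interpreted in light of the conversation. The sketch below assumes plain string concatenation; the function name `build_retrieval_query` and the `max_turns` parameter are hypothetical.

```python
def build_retrieval_query(history: list[str], user_message: str, max_turns: int = 2) -> str:
    """Build a search query from the latest message and recent conversation turns.

    `max_turns` (hypothetical) controls how many earlier turns are included.
    """
    # Guard against max_turns == 0: history[-0:] would return the whole list.
    recent = history[-max_turns:] if max_turns > 0 else []
    return " ".join(recent + [user_message])


history = [
    "How many vacation days do new employees get?",
    "New employees receive 15 vacation days per year.",
]
# On its own the follow-up lacks context; combined with history it mentions vacation days.
print(build_retrieval_query(history, "What about part-time staff?"))
```

Concatenation is the crudest option; many systems instead ask the language model to rewrite a follow-up into a standalone question before retrieving.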

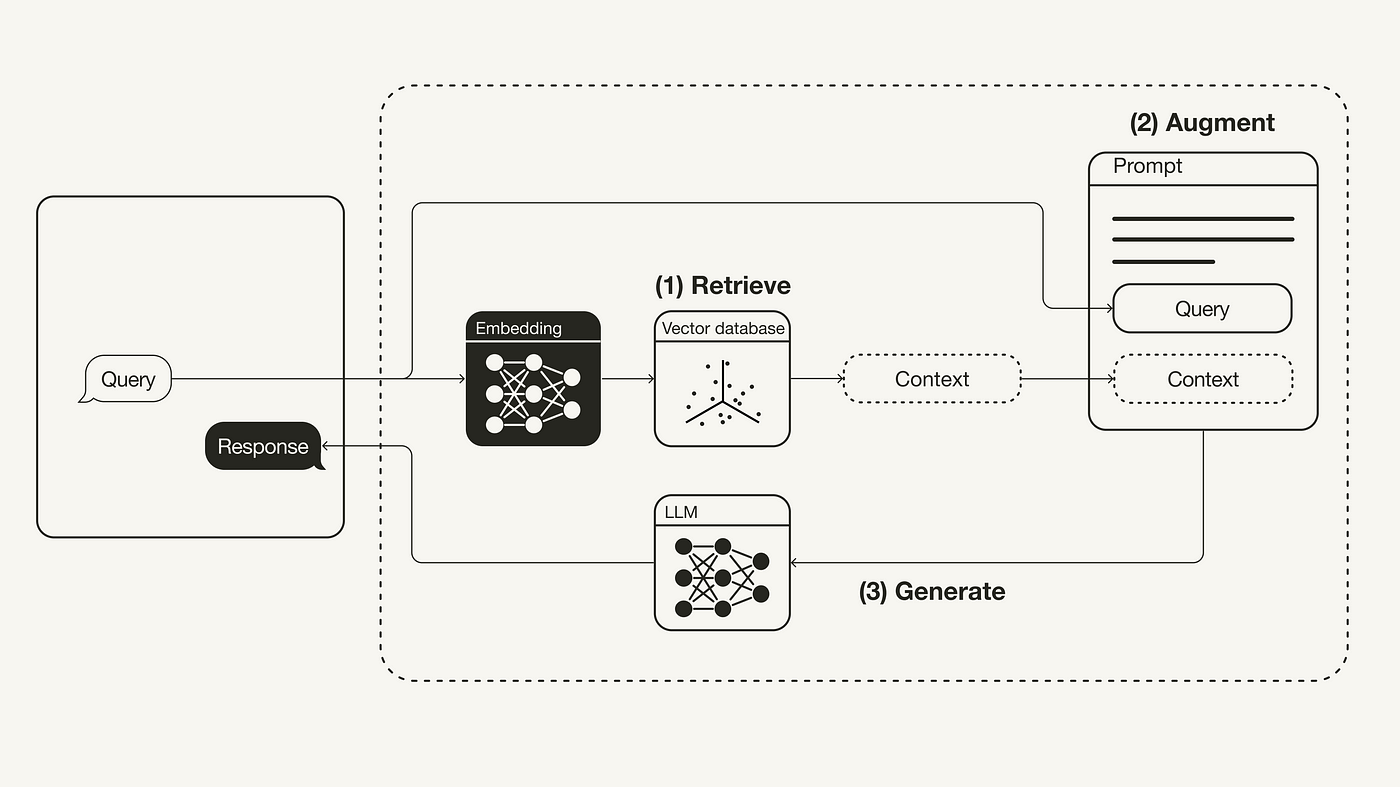

3. Dual-Stage Architectures

RAG systems typically use a dual-stage architecture made up of a retriever and a generator. The retriever first selects passages relevant to the input, and the generator then produces a response conditioned on both the input and those retrieved passages.
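The retriever-then-generator flow can be sketched as a function that takes both stages as parameters. In this minimal Python version the retriever is a toy keyword-overlap ranker and the generator is a stub that echoes the top passage; both are hypothetical stand-ins, and a real system would plug in a search index and a language model call instead.

```python
import re
from typing import Callable

# Hypothetical stage signatures: a retriever maps (query, k) to passages,
# and a generator maps a prompt to generated text.
Retriever = Callable[[str, int], list[str]]
Generator = Callable[[str], str]


def rag_answer(query: str, retrieve: Retriever, generate: Generator, k: int = 3) -> str:
    # Stage 1: retrieve up to k passages relevant to the query.
    passages = retrieve(query, k)
    # Stage 2: generate a response conditioned on the query and the passages.
    context = "\n".join(passages)
    prompt = f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    return generate(prompt)


# Toy corpus so the sketch runs without external services.
DOCS = [
    "The retriever selects passages relevant to the query.",
    "The generator writes a response conditioned on those passages.",
    "Bananas are rich in potassium.",
]


def tokenize(text: str) -> set[str]:
    # Lowercase word set; punctuation is dropped.
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_retriever(query: str, k: int) -> list[str]:
    # Toy retriever: rank documents by the number of words shared with the query.
    q = tokenize(query)
    ranked = sorted(DOCS, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def echo_generator(prompt: str) -> str:
    # Stub generator: returns the first context line instead of calling a model.
    return prompt.splitlines()[1]


print(rag_answer("What does the retriever do?", keyword_retriever, echo_generator, k=2))
```

Keeping the two stages behind separate interfaces means either one can be swapped, for example replacing keyword matching with semantic search, without changing the other.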

Implementing RAG in Real-World Scenarios

Case Study: GitHub Copilot

One notable example of RAG in action is GitHub Copilot, an AI-powered coding assistant developed by GitHub. By integrating RAG techniques, GitHub Copilot can provide developers with contextually relevant code suggestions and documentation snippets, significantly enhancing their coding experience.

Case Study: IBM Watson

IBM, another prominent player in the AI space, has applied RAG in its internal customer-care chatbots. These chatbots retrieve relevant information from HR files and company policies, enabling them to give employees accurate and personalized assistance.

Overcoming Challenges and Future Directions

RAG holds considerable promise, but it is not without challenges. Key challenges include:

- Data Quality: Ensuring the accuracy and reliability of external knowledge sources.

- Scalability: Scaling RAG techniques to handle large volumes of data and complex queries.

- Ethical Considerations: Addressing ethical concerns related to data privacy and bias in AI-generated responses.
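For the scalability challenge, one widely used technique for keyword-based retrieval is an inverted index, which maps each term to the documents containing it so that a query only scores candidate documents instead of scanning the whole collection. The sketch below is a simplified, in-memory Python illustration; the class name `InvertedIndex` and the scoring rule are hypothetical, and production systems typically rely on dedicated search engines or approximate-nearest-neighbor vector indexes.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; a deliberately simple tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    """Maps each term to the IDs of the documents that contain it."""

    def __init__(self) -> None:
        self.postings: dict[str, set[int]] = defaultdict(set)
        self.docs: list[str] = []

    def add(self, text: str) -> int:
        doc_id = len(self.docs)
        self.docs.append(text)
        for term in set(tokenize(text)):
            self.postings[term].add(doc_id)
        return doc_id

    def search(self, query: str, k: int = 3) -> list[str]:
        # Count matching query terms per candidate; documents sharing no
        # term with the query are never touched.
        scores: dict[int, int] = defaultdict(int)
        for term in set(tokenize(query)):
            for doc_id in self.postings.get(term, ()):
                scores[doc_id] += 1
        # Highest score first; ties broken by insertion order.
        ranked = sorted(scores, key=lambda d: (-scores[d], d))
        return [self.docs[d] for d in ranked[:k]]


index = InvertedIndex()
index.add("Vacation policy: new employees receive 15 days.")
index.add("Expense policy: submit receipts within 30 days.")
index.add("Office hours are 9 to 5.")
print(index.search("vacation days", k=2))
```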

Looking ahead, ongoing research is focused on addressing these challenges and further extending the capabilities of RAG-based AI systems.

Conclusion

Retrieval Augmented Generation is a significant advance in AI, enabling models to generate text that is more contextually relevant and coherent. By integrating external knowledge sources into AI models, RAG opens up new possibilities for dialogue systems, question answering, and text generation. As organizations continue to explore RAG, further innovation in these areas is likely.

Key Takeaways

- RAG combines elements of natural language generation with retrieval-based models to enhance text generation.

- By leveraging external knowledge sources, RAG can substantially improve the relevance and coherence of AI-generated responses.

- Its dual-stage retriever-generator architecture, its context-aware responses, and its real-world deployments are central to RAG's success.

- Challenges such as data quality, scalability, and ethical considerations need to be addressed to fully unlock the potential of RAG.

Frequently Asked Questions (FAQs)

- What is Retrieval Augmented Generation (RAG)? Retrieval Augmented Generation (RAG) is an innovative approach in artificial intelligence that combines elements of natural language generation with retrieval-based models. It enhances the process of text generation by incorporating external sources of knowledge, such as large datasets or databases, to produce more contextually relevant and coherent responses.

- How does RAG improve the quality of AI-generated responses? RAG improves the quality of AI-generated responses by grounding them in knowledge from external sources. Because the generator works from relevant information retrieved from databases or other sources, its responses tend to be more accurate, informative, and contextually appropriate.

- What are the main benefits of implementing RAG in AI systems? The main benefits of implementing RAG in AI systems include:

  - Enhanced relevance and coherence of generated text.

  - Context-aware responses tailored to specific queries or conversations.

  - Improved accuracy and informativeness of AI-generated responses.

  - Ability to leverage external knowledge sources for more intelligent interactions.

- How does RAG differ from fine-tuning in customizing AI models? Fine-tuning customizes a model by continuing to train it on task-specific (often labeled) data, which updates the model's parameters. RAG leaves the parameters unchanged and instead supplies relevant information retrieved from external sources at inference time, so the knowledge available to the system can be updated by changing the underlying data rather than retraining the model.

- What are the challenges associated with implementing RAG? Some of the challenges associated with implementing RAG include:

  - Ensuring the accuracy and reliability of external knowledge sources.

  - Scalability issues when dealing with large volumes of data or complex queries.

  - Ethical considerations related to data privacy, bias, and fairness in AI-generated responses.

- Can RAG be applied in both open-domain and closed-domain scenarios? Yes, RAG can be applied in both open-domain and closed-domain scenarios. In open-domain scenarios, RAG can retrieve information from a wide range of sources to generate responses to diverse queries. In closed-domain scenarios, RAG can be tailored to specific domains or datasets, enabling more accurate and relevant responses within a confined context.

- What are some real-world examples of organizations using RAG? Some real-world examples of organizations using RAG include:

  - GitHub Copilot, an AI-powered coding assistant that utilizes RAG techniques to provide contextually relevant code suggestions.

  - IBM Watson, which implements RAG in internal customer-care chatbots to retrieve relevant information from HR files and company policies for personalized assistance.

- How does semantic search enhance RAG workflows? Semantic search retrieves information based on the meaning of a query rather than on exact keyword matches, typically by comparing vector embeddings of the query and the documents. This lets a RAG system find relevant passages even when they are worded differently from the query, which improves the quality of the generated responses.

- What are the potential future directions for RAG technology? The potential future directions for RAG technology include:

  - Further advancements in semantic understanding and contextual reasoning to enhance the relevance and coherence of generated text.

  - Integration of RAG with other technologies, such as knowledge graphs and newer deep learning architectures, for more intelligent and contextually aware AI systems.

  - Exploration of RAG applications in new domains and industries, including healthcare, finance, and education.

- How can organizations leverage RAG to enhance their AI applications? Organizations can leverage RAG to enhance their AI applications by:

  - Incorporating RAG techniques into existing chatbots, virtual assistants, and customer service systems to improve the quality and relevance of interactions.

  - Utilizing RAG for tasks such as question answering, dialogue generation, and content summarization to provide users with more informative and contextually appropriate responses.

  - Investing in research and development to explore new use cases and applications of RAG technology across various domains and industries.
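To illustrate the FAQ answer on semantic search, here is a minimal Python sketch of the ranking step: the query and each document are represented as vectors and compared with cosine similarity. The three-dimensional vectors are hand-written stand-ins for the output of an embedding model, an assumption made so the example runs without one; real embeddings come from a trained model and have far more dimensions. The function name `semantic_search` is hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Cosine of the angle between two vectors; 0.0 if either is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(
    query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2
) -> list[tuple[str, float]]:
    # Score every document against the query and keep the k most similar.
    scored = [(doc, cosine_similarity(query_vec, vec)) for doc, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical embeddings; imagine the axes loosely mean (time off, money, travel).
DOC_VECS = {
    "Annual leave policy": [0.9, 0.1, 0.0],
    "Expense reimbursement": [0.1, 0.9, 0.2],
    "Business travel guide": [0.0, 0.3, 0.9],
}
# A query such as "How do I book a holiday?" might embed close to the leave
# policy even though it shares no keywords with "Annual leave policy".
query_vec = [0.8, 0.0, 0.3]
for title, score in semantic_search(query_vec, DOC_VECS):
    print(f"{title}: {score:.3f}")
```

Only the similarity ranking here is real; swapping the hand-written vectors for embeddings from an actual model is what would make this a working semantic retriever.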